SCARS Institute’s Encyclopedia of Scams™ Published Continuously for 25 Years

SCARS™ Special Report: Facebook Moderators – The Weak Link

Facebook Has Nearly 10,000 “Content Moderators” Around The World

«Extracted from The Guardian – copyright acknowledged»

Facebook Moderators: A Quick Guide To Their Job And Its Challenges From The Guardian

Though Facebook has its own comparatively small in-house moderating team, most moderators work for subcontractors. There are moderating hubs around the world, but Facebook refuses to disclose their exact number or locations.

Moderators get two weeks’ training plus prescriptive manuals devised by Facebook executives based at the company headquarters in Menlo Park, California.

It is these documents that have been leaked to the Guardian.

They show the breadth of the issues being dealt with by Facebook – from graphic violence to terrorism and cannibalism. If Facebook users are talking about a controversial issue, the company has to have a policy on it to guide moderators.

Facebook has automatic systems for rooting out extreme content before it hits the site, particularly on child sexual abuse and terrorism, but its moderators do not get involved in this proactive work.

Instead, they review millions of reports flagged to them by Facebook users and use the manuals to decide whether to ignore, “escalate” or delete what they see. When they escalate a report, it usually means it is sent to a more senior manager to decide what to do.

This is particularly important when the content relates to potential suicides and self-harm, because Facebook has a team that liaises with support agencies and charities to try to get people help.

For comments that seem cruel or insensitive, moderators can recommend a “cruelty checkpoint”; this involves a message being sent to the person who posted it asking them to consider taking it down.

If the user continues to post hurtful material, the account can be temporarily closed.

The files also show Facebook has developed a law enforcement response team, which deals with requests for help from police and security agencies.

The company has designed a special page to help moderators, called the single review tool (SRT). On the right-hand side of the SRT screen, which all moderators have, there is a menu of options to help them filter content into silos.

While this has speeded up the process of moderation, the Guardian has been told moderators often feel overwhelmed by the number of posts they have to review – and they make mistakes, particularly in the complicated area of permissible sexual content.

The manuals seen by the Guardian are occasionally updated – with new versions sent to moderators. But small changes in policy are dealt with by a number of subject matter experts (SMEs), whose job is to tell moderators when Facebook has decided to tweak a rule. The SMEs also oversee the work of moderators, who have to undergo regular performance reviews.

The Guardian has been told this adds to the stress of the job and has contributed to the high turnover of moderators, who say they suffer from anxiety and post-traumatic stress.

Facebook acknowledged the difficulties faced by its staff and said moderators “have a challenging and difficult job. A lot of the content is upsetting. We want to make sure the reviewers are able to gain enough confidence to make the right decision, but also have the mental and emotional resources to stay healthy. This is another big challenge for us.”

There Are Even Guidelines On Sports Match-Fixing And Cannibalism

The Facebook Files give the first view of the codes and rules formulated by the site, which is under huge political pressure in Europe and the US.

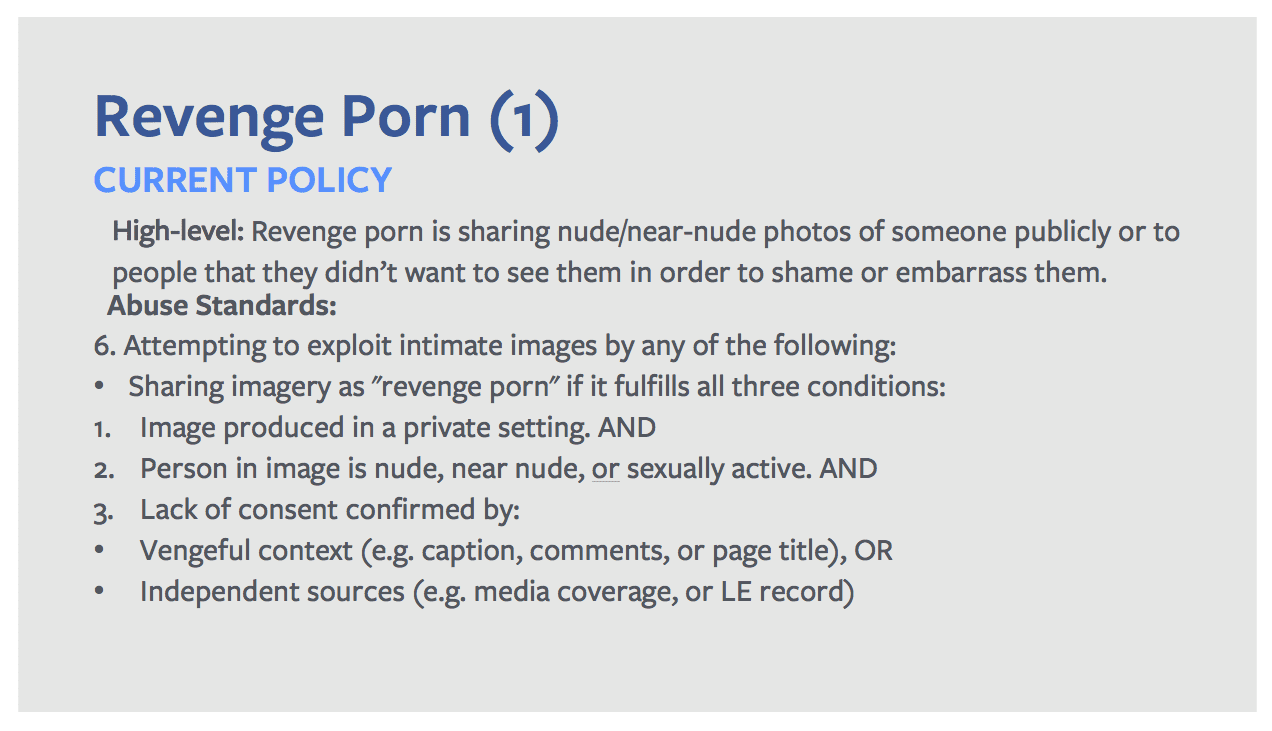

They illustrate difficulties faced by executives scrabbling to react to new challenges such as “revenge porn” – and the challenges for moderators, who say they are overwhelmed by the volume of work, which means they often have “just 10 seconds” to make a decision.

“Facebook cannot keep control of its content,” said one source. “It has grown too big, too quickly.”

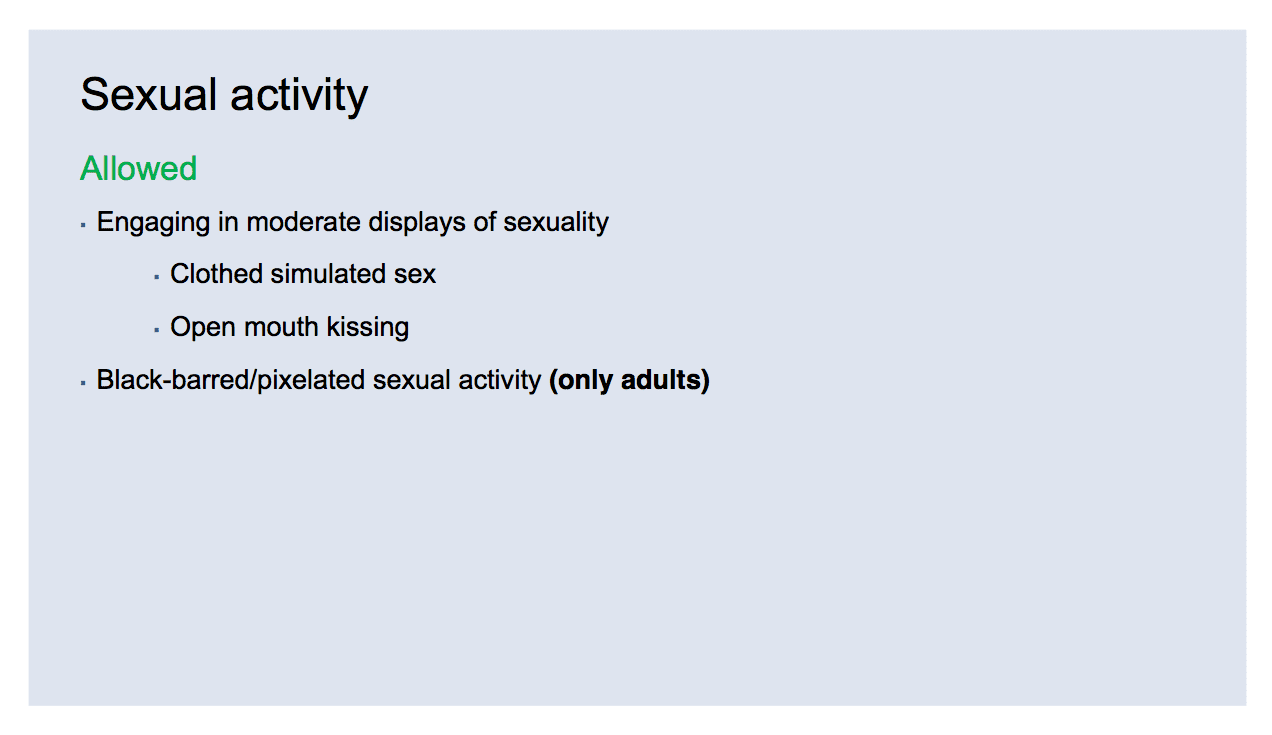

Many moderators are said to have concerns about the inconsistency and peculiar nature of some of the policies. Those on sexual content, for example, are said to be the most complex and confusing.

One document says Facebook reviews more than 6.5m reports a week relating to potentially fake accounts – known as FNRP (fake, not a real person)

Using thousands of slides and pictures, Facebook sets out guidelines that may worry critics who say the service is now a publisher and must do more to remove hateful, hurtful and violent content.

Yet these blueprints may also alarm free speech advocates concerned about Facebook’s de facto role as the world’s largest censor. Both sides are likely to demand greater transparency.

The Guardian has seen documents supplied to Facebook moderators within the last year. The files tell them:

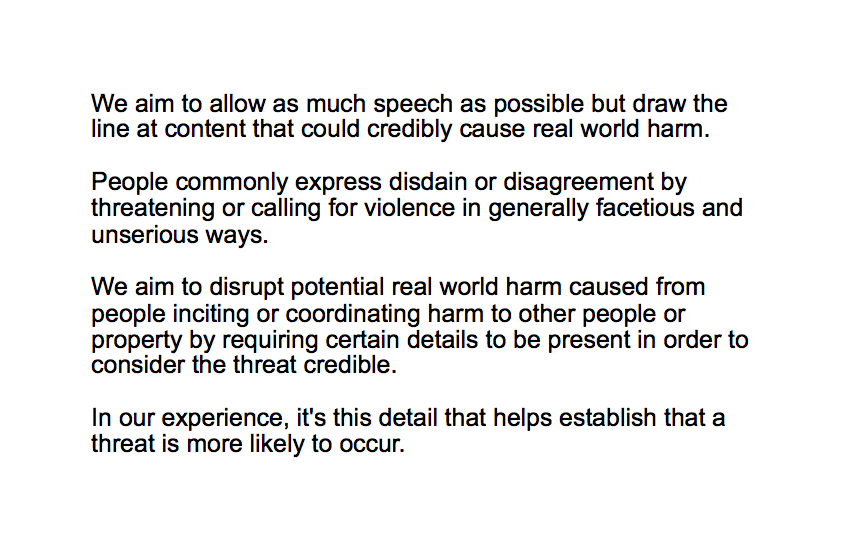

- Remarks such as “Someone shoot Trump” should be deleted, because as a head of state he is in a protected category. But it can be permissible to say: “To snap a bitch’s neck, make sure to apply all your pressure to the middle of her throat”, or “fuck off and die” because they are not regarded as credible threats. See their Threats Of Violence Training Here »

- Videos of violent deaths, while marked as disturbing, do not always have to be deleted because they can help create an awareness of issues such as mental illness.

- Some photos of non-sexual physical abuse and bullying of children do not have to be deleted or “actioned” unless there is a sadistic or celebratory element.

- Photos of animal abuse can be shared, with only extremely upsetting imagery to be marked as “disturbing”.

- All “handmade” art showing nudity and sexual activity is allowed but digitally made art showing sexual activity is not.

- Videos of abortions are allowed, as long as there is no nudity.

- Facebook will allow people to livestream attempts to self-harm because it “doesn’t want to censor or punish people in distress”.

- Anyone with more than 100,000 followers on a social media platform is designated as a public figure – which denies them the full protection given to private individuals.

Other types of remarks that can be permitted by the documents include: “Little girl needs to keep to herself before daddy breaks her face,” and “I hope someone kills you.” The threats are regarded as either generic or not credible.

In one of the leaked documents, Facebook acknowledges “people use violent language to express frustration online” and feel “safe to do so” on the site.

It says: “They feel that the issue won’t come back to them and they feel indifferent towards the person they are making the threats about because of the lack of empathy created by communication via devices as opposed to face to face.

“We should say that violent language is most often not credible until specificity of language gives us a reasonable ground to accept that there is no longer simply an expression of emotion but a transition to a plot or design. From this perspective language such as ‘I’m going to kill you’ or ‘Fuck off and die’ is not credible and is a violent expression of dislike and frustration.”

It adds: “People commonly express disdain or disagreement by threatening or calling for violence in generally facetious and unserious ways.”

Facebook conceded that “not all disagreeable or disturbing content violates our community standards”.

Monika Bickert, Facebook’s head of global policy management, said the service had almost 2 billion users and that it was difficult to reach a consensus on what to allow.

“We have a really diverse global community and people are going to have very different ideas about what is OK to share. No matter where you draw the line there are always going to be some grey areas. For instance, the line between satire and humour and inappropriate content is sometimes very grey. It is very difficult to decide whether some things belong on the site or not,” she said.

“We feel responsible to our community to keep them safe and we feel very accountable. It’s absolutely our responsibility to keep on top of it. It’s a company commitment. We will continue to invest in proactively keeping the site safe, but we also want to empower people to report to us any content that breaches our standards.”

She said some offensive comments may violate Facebook policies in some contexts, but not in others.

Facebook’s leaked policies on subjects including violent death, images of non-sexual physical child abuse and animal cruelty show how the site tries to navigate a minefield.

The files say: “Videos of violent deaths are disturbing but can help create awareness. For videos, we think minors need protection and adults need a choice. We mark as ‘disturbing’ videos of the violent deaths of humans.”

Such footage should be “hidden from minors” but not automatically deleted because it can “be valuable in creating awareness for self-harm afflictions and mental illness or war crimes and other important issues”.

Regarding non-sexual child abuse, Facebook says: “We do not action photos of child abuse. We mark as disturbing videos of child abuse. We remove imagery of child abuse if shared with sadism and celebration.”

One slide explains Facebook does not automatically delete evidence of non-sexual child abuse to allow the material to be shared so “the child [can] be identified and rescued, but we add protections to shield the audience”. This might be a warning on the video that the content is disturbing.

Facebook confirmed there are “some situations where we do allow images of non-sexual abuse of a child for the purpose of helping the child”.

Its policies on animal abuse are also explained, with one slide saying: “We allow photos and videos documenting animal abuse for awareness, but may add viewer protections to some content that is perceived as extremely disturbing by the audience.

“Generally, imagery of animal abuse can be shared on the site. Some extremely disturbing imagery may be marked as disturbing.”

Photos of animal mutilation, including those showing torture, can be marked as disturbing rather than deleted. Moderators can also leave photos of abuse where a human kicks or beats an animal.

Facebook said: “We allow people to share images of animal abuse to raise awareness and condemn the abuse but remove content that celebrates cruelty against animals.”

The files show Facebook has issued new guidelines on nudity after last year’s outcry when it removed an iconic Vietnam war photo because the girl in the picture was naked.

It now allows for “newsworthy exceptions” under its “terror of war” guidelines but draws the line at images of “child nudity in the context of the Holocaust”.

Facebook told the Guardian it was using software to intercept some graphic content before it got on the site, but that “we want people to be able to discuss global and current events … so the context in which a violent image is shared sometimes matters”.

Some critics in the US and Europe have demanded that the company be regulated in the same way as mainstream broadcasters and publishers.

But Bickert said Facebook was “a new kind of company. It’s not a traditional technology company. It’s not a traditional media company. We build technology, and we feel responsible for how it’s used. We don’t write the news that people read on the platform.”

A report by British MPs published on 1 May said “the biggest and richest social media companies are shamefully far from taking sufficient action to tackle illegal or dangerous content, to implement proper community standards or to keep their users safe”.

Sarah T Roberts, an expert on content moderation, said: “It’s one thing when you’re a small online community with a group of people who share principles and values, but when you have a large percentage of the world’s population and say ‘share yourself’, you are going to be in quite a muddle.

“Then when you monetise that practice you are entering a disaster situation.”

Facebook has consistently struggled to assess the news or “awareness” value of violent imagery. While the company recently faced harsh criticism for failing to remove videos of Robert Godwin being killed in the US and of a father killing his child in Thailand, the platform has also played an important role in disseminating videos of police killings and other government abuses.

In 2016, Facebook removed a video showing the immediate aftermath of the fatal police shooting of Philando Castile but subsequently reinstated the footage, saying the deletion was a “mistake”.

These documents show that Facebook is far from rigid or crystal clear in its training and directions to its moderators. The result is very uneven and inconsistent decision-making. In addition, because moderators are pressured to make decisions fast, there is no time for real research – they see at a glance and decide.

Given the enormous revenues that Facebook makes, we will continue to pressure the U.S. Government to demand greater levels of moderation as was done with the original MySpace more than a decade ago.

We thank the Guardian for allowing us to reprint this information for the benefit of victims of Facebook’s cybercriminals. « See more investigative reports on Facebook on The Guardian »

PLEASE SHARE OUR ARTICLES WITH YOUR CONTACTS

HELP OTHERS STAY SAFE ONLINE

SCARS™ Team

A SCARS Division

Miami Florida U.S.A.

TAGS: SCARS, Important Article, Information About Scams, Anti-Scam

END

MORE INFORMATION

– – –

Tell us about your experiences with Romance Scammers in our

«Scams Discussion Forum on Facebook»

– – –

FAQ: How Do You Properly Report Scammers?

It is essential that law enforcement knows about scams & scammers, even though there is nothing (in most cases) that they can do.

Always report scams involving money lost or where you received money to:

- Local Police – ask them to take an “informational” police report – say you need it for your insurance

- Your National Police or FBI « www.IC3.gov »

- The SCARS|CDN™ Cybercriminal Data Network – Worldwide Reporting Network « HERE » or on « www.Anyscam.com »

This helps your government understand the problem, and allows law enforcement to add scammers to watch lists worldwide.

– – –

Visit our NEW Main SCARS Facebook page for much more information about scams and online crime: « www.facebook.com/SCARS.News.And.Information »

To learn more about SCARS visit « www.AgainstScams.org »

Please be sure to report all scammers

« HERE » or on « www.Anyscam.com »

Legal Notices:

All original content is Copyright © 1991 – 2020 SCARS All Rights Reserved Worldwide & Webwide. Third-party copyrights acknowledged.

SCARS, RSN, Romance Scams Now, SCARS|WORLDWIDE, SCARS|GLOBAL, SCARS, Society of Citizens Against Relationship Scams, Society of Citizens Against Romance Scams, SCARS|ANYSCAM, Project Anyscam, Anyscam, SCARS|GOFCH, GOFCH, SCARS|CHINA, SCARS|CDN, SCARS|UK, SCARS Cybercriminal Data Network, Cobalt Alert, Scam Victims Support Group, are all trademarks of Society of Citizens Against Relationship Scams Incorporated.

Contact the law firm for the Society of Citizens Against Relationship Scams Incorporated by email at legal@AgainstScams.org

-/ 30 /-

Important Information for New Scam Victims

- Please visit www.ScamVictimsSupport.org – a SCARS Website for New Scam Victims & Sextortion Victims

- Enroll in FREE SCARS Scam Survivor’s School now at www.SCARSeducation.org

- Please visit www.ScamPsychology.org – to more fully understand the psychological concepts involved in scams and scam victim recovery

If you are looking for local trauma counselors please visit counseling.AgainstScams.org or join SCARS for our counseling/therapy benefit: membership.AgainstScams.org

If you need to speak with someone now, you can dial 988 or find phone numbers for crisis hotlines all around the world here: www.opencounseling.com/suicide-hotlines

A Note About Labeling!

We often use the term ‘scam victim’ in our articles, but this is a convenience to help those searching for information in search engines like Google. It is just a convenience and has no deeper meaning. If you have come through such an experience, YOU are a Survivor! It was not your fault. You are not alone! Axios!

A Question of Trust

At the SCARS Institute, we invite you to do your own research on the topics we speak about and publish. Our team investigates the subject being discussed, especially when it comes to understanding the scam victim-survivor experience. You can do Google searches, but in many cases you will have to wade through scientific papers and studies. However, remember that biases and perspectives matter and influence the outcome. Regardless, we encourage you to explore these topics as thoroughly as you can for your own awareness.

Statement About Victim Blaming

SCARS Institute articles examine different aspects of the scam victim experience, as well as those who may have been secondary victims. This work focuses on understanding victimization through the science of victimology, including common psychological and behavioral responses. The purpose is to help victims and survivors understand why these crimes occurred, reduce shame and self-blame, strengthen recovery programs and victim opportunities, and lower the risk of future victimization.

At times, these discussions may sound uncomfortable, overwhelming, or may be mistaken for blame. They are not. Scam victims are never blamed. Our goal is to explain the mechanisms of deception and the human responses that scammers exploit, and the processes that occur after the scam ends, so victims can better understand what happened to them and why it felt convincing at the time, and what the path looks like going forward.

Articles that address the psychology, neurology, physiology, and other characteristics of scams and the victim experience recognize that all people share cognitive and emotional traits that can be manipulated under the right conditions. These characteristics are not flaws. They are normal human functions that criminals deliberately exploit. Victims typically have little awareness of these mechanisms while a scam is unfolding and a very limited ability to control them. Awareness often comes only after the harm has occurred.

By explaining these processes, these articles help victims make sense of their experiences, understand common post-scam reactions, and identify ways to protect themselves moving forward. This knowledge supports recovery by replacing confusion and self-blame with clarity, context, and self-compassion.

Additional educational material on these topics is available at ScamPsychology.org – ScamsNOW.com and other SCARS Institute websites.

Psychology Disclaimer:

All articles about psychology and the human brain on this website are for information & education only

The information provided in this article is intended for educational and self-help purposes only and should not be construed as a substitute for professional therapy or counseling.

While any self-help techniques outlined herein may be beneficial for scam victims seeking to recover from their experience and move towards recovery, it is important to consult with a qualified mental health professional before initiating any course of action. Each individual’s experience and needs are unique, and what works for one person may not be suitable for another.

Additionally, any approach may not be appropriate for individuals with certain pre-existing mental health conditions or trauma histories. It is advisable to seek guidance from a licensed therapist or counselor who can provide personalized support, guidance, and treatment tailored to your specific needs.

If you are experiencing significant distress or emotional difficulties related to a scam or other traumatic event, please consult your doctor or mental health provider for appropriate care and support.

Also read our SCARS Institute Statement about Professional Care for Scam Victims – click here to go to our ScamsNOW.com website.
