RSN™ Special Report: Community Standards Enforcement Preliminary Report 2018

According to Facebook:

We want to protect and respect both expression and personal safety on Facebook. Our goal is to create a safe and welcoming community for the more than 2 billion people who use Facebook around the world, across cultures and perspectives.

To help us with that goal, our Community Standards define what is and isn’t allowed on Facebook. We don’t allow anything that goes against these standards, and we invest in technology, processes and people to help us act quickly so standards violations affect as few people as possible.

People may intentionally or unintentionally act in ways that go against our standards. We respond differently depending on the severity, and we may take further action against people who repeatedly violate standards. In some cases, we also contact law enforcement to prevent real-world harm.

About Facebook’s Report

Facebook says it regularly gets requests for information about how effective it is at preventing, identifying and removing violations, and about how its technology helps these processes.

They claim that they want to communicate details about this work and how it affects their community. They have shared preliminary metrics in this report to help people understand how they are doing at enforcing their Community Standards. Of course, this is purely a defensive move, as Facebook is under siege for so many abuses and lapses in the management of its platform.

This report, the first they have published about their ongoing Community Standards enforcement efforts, is just a first step, or so they say.

They say, probably correctly, that they are still refining their internal methodologies for measuring these efforts and expect these numbers to become more precise over time. This report covers the period October 2017 through March 2018 and includes metrics on enforcement of:

- Graphic Violence

- Adult Nudity and Sexual Activity

- Terrorist Propaganda (ISIS, al-Qaeda and affiliates)

- Hate Speech

- Spam

- Fake Accounts (which is what we primarily care about)

Facebook claims that they continually improve how they internally categorize the actions they take to enforce their Community Standards. This involves consistently categorizing the violation types for the actions they take against content and accounts. Getting better at categorizing these actions will, they state, help them refine their metrics. As they do this, we hope they will keep expanding their reporting to cover additional standards in future versions of the report.

The Facebook Approach To Measurement

With this report, they say they aim to answer 4 questions for enforcement of each Community Standard.

1: How Prevalent Are Community Standards Violations On Facebook?

We consider prevalence to be a critical metric because it helps us measure how many violations impact people on Facebook. We measure the estimated percentage of views that were of violating content. (A view happens any time a piece of content appears on a user’s screen.) For fake accounts, we estimate the percentage of monthly active Facebook accounts that were fake. These metrics are estimated using samples of content views and accounts from across Facebook.
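Facebook does not publish its sampling estimator, so the following is only a minimal Python sketch of the general idea, assuming a simple random sample of views (or accounts) that reviewers have labeled as violating (or fake). The function name `estimate_share`, the normal-approximation interval and the sample counts are our own illustrative assumptions, not Facebook's method.

```python
import math


def estimate_share(labels: list[bool], z: float = 1.96) -> tuple[float, float, float]:
    """Estimate the share of sampled items labeled violating (or fake).

    Hypothetical helper: returns (point_estimate, lower, upper) using a
    normal-approximation interval; z=1.96 gives roughly 95% coverage
    for large samples.
    """
    n = len(labels)
    if n == 0:
        raise ValueError("need at least one sampled item")
    p = sum(labels) / n  # True counts as 1, False as 0
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


# Hypothetical sample: 10,000 sampled accounts, 350 labeled fake by reviewers.
sample = [True] * 350 + [False] * 9_650
p, lo, hi = estimate_share(sample)
print(f"estimated fake share: {p:.2%} (95% CI {lo:.2%} to {hi:.2%})")
```

The interval matters because a prevalence figure built from a sample is an estimate, and its precision depends on how many views or accounts were actually reviewed.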

2: How Much Content Does Facebook Take Action On?

We measure the number of pieces of content (such as posts, photos, videos or comments) or accounts we take action on for going against standards. “Taking action” could include removing a piece of content from Facebook, covering photos or videos that may be disturbing to some audiences with a warning, or disabling accounts.

3: How Much Violating Content Does Facebook Find Before Users Report It?

This metric shows the percentage of all content or accounts acted on that we found and flagged before users reported them. We use detection technology and people on our trained teams (who focus on finding harmful content such as terrorist propaganda or fraudulent spam) to help find potentially violating content and accounts and flag them for review. Then, we review them to determine if they violate standards and take action if they do. The remaining percentage reflects content and accounts we take action on because users report them to us first.

4: How Quickly Does Facebook Take Action On Violations?

Facebook says this fourth metric is not yet available.

We try to act as quickly as possible against violations to minimize their impact on users. One way we’re considering answering this question is by measuring views before we can take action. We’re finalizing our methodologies for how we measure this across different violation types. We’ll make these metrics available in future versions of this report.
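Facebook has not said how it will compute views before action. As a hypothetical illustration only, assuming a log of view timestamps for each piece of content and the time action was taken on it, the count might look like this in Python; `views_before_action` and the timestamps are invented for the example.

```python
from datetime import datetime
from typing import List, Optional


def views_before_action(view_times: List[datetime], action_time: Optional[datetime]) -> int:
    """Count views of one piece of content that happened before action was taken.

    Hypothetical metric: if no action was ever taken, every recorded view counts.
    """
    if action_time is None:
        return len(view_times)
    return sum(1 for t in view_times if t < action_time)


# Hypothetical log for one violating post: four views, removed at 12:10.
views = [datetime(2018, 3, 1, 12, m) for m in (0, 5, 9, 15)]
removed_at = datetime(2018, 3, 1, 12, 10)
print(views_before_action(views, removed_at))  # prints 3
```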

In this article we focus on fake accounts and set the other categories aside.

Fake Facebook Accounts

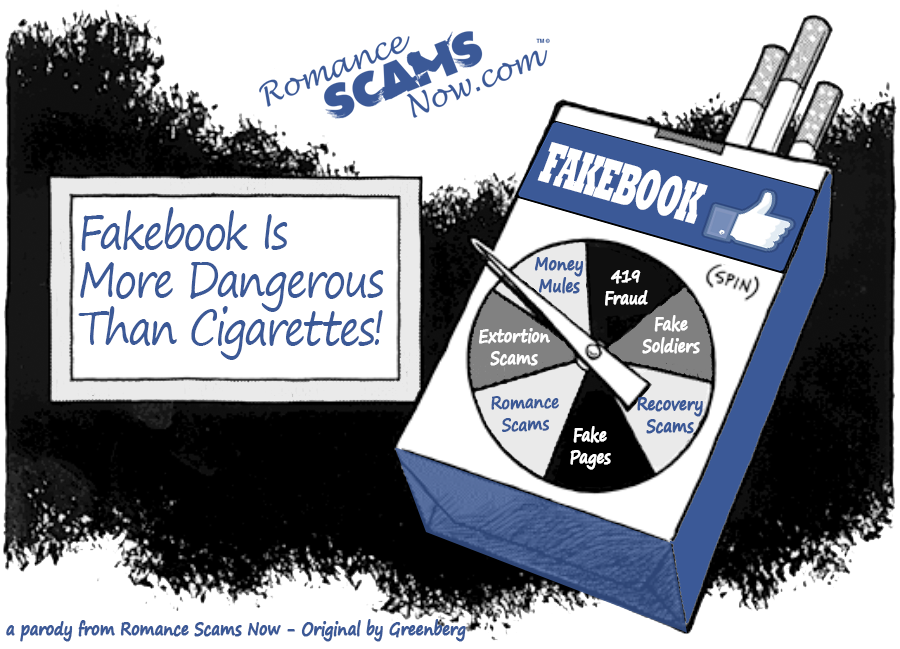

According to Facebook, “Individual people sometimes create fake accounts on Facebook to misrepresent who they are.” Really? Facebook goes on to say that in most cases, bad actors try to create fake accounts in large volumes automatically using scripts or bots, with the intent of spreading spam or conducting illicit activities such as scams.

Because fake accounts are often the starting point for other types of standards violations, Facebook claims it is vigilant about blocking and removing them.

They say, “Getting better at eliminating fake accounts makes us more effective at preventing other kinds of violations. Our detection technology helps us block millions of attempts to create fake accounts every day and detect millions more often within minutes after creation. We don’t include blocked attempts in the metrics we report here.” Great, thanks Facebook!

How Prevalent Are Fake Accounts On Facebook According To Facebook?

Facebook Says:

“We estimate that fake accounts represented approximately 3% to 4% of monthly active users (MAU) on Facebook during Q1 2018 and Q4 2017. We share this number in the Facebook quarterly financial results. This estimate may vary each quarter based on spikes or dips in automated fake account creation.”

This is inconsistent with Facebook’s own quarterly reports, where the figure is closer to 8%. SCARS analysis puts it closer to 20%, and 20% would mean roughly 400 million fake profiles.
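The arithmetic behind these percentages is simple. The Python sketch below assumes roughly 2 billion monthly active users (the round figure Facebook cites at the top of this article) and converts each quoted share into an implied number of fake accounts; `implied_fakes` is a hypothetical helper.

```python
# Assumption: about 2 billion monthly active users, the round figure
# Facebook quotes ("more than 2 billion people").
MAU = 2_000_000_000


def implied_fakes(share: float, mau: int = MAU) -> int:
    """Number of fake accounts implied by a given share of MAU."""
    return round(share * mau)


estimates = [
    ("Facebook low", 0.03),
    ("Facebook high", 0.04),
    ("quarterly reports", 0.08),
    ("SCARS estimate", 0.20),
]
for label, share in estimates:
    millions = implied_fakes(share) / 1e6
    print(f"{label:>18}: {share:>4.0%} -> {millions:,.0f} million fake accounts")
```

Because MAU exceeds 2 billion, each of these counts is, if anything, a slight underestimate.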

How Many Fake Accounts Does Facebook Say They Take Action On?

What Does Facebook Measure?

Facebook says it measures how many accounts it took action on because it determined they were fake. According to Facebook, this number reflects fake accounts that were created and then disabled; it does not include blocked attempts to create fake accounts.

What The Numbers Mean

According to Facebook: “These numbers are largely affected by external factors, such as cyberattacks that increase fake accounts on Facebook. Bad actors try to create fake accounts in large volumes automatically using scripts or bots, with the intent of spreading spam or conducting illicit activities such as scams. The numbers can also be affected by internal factors, including the effectiveness of our detection technology.”

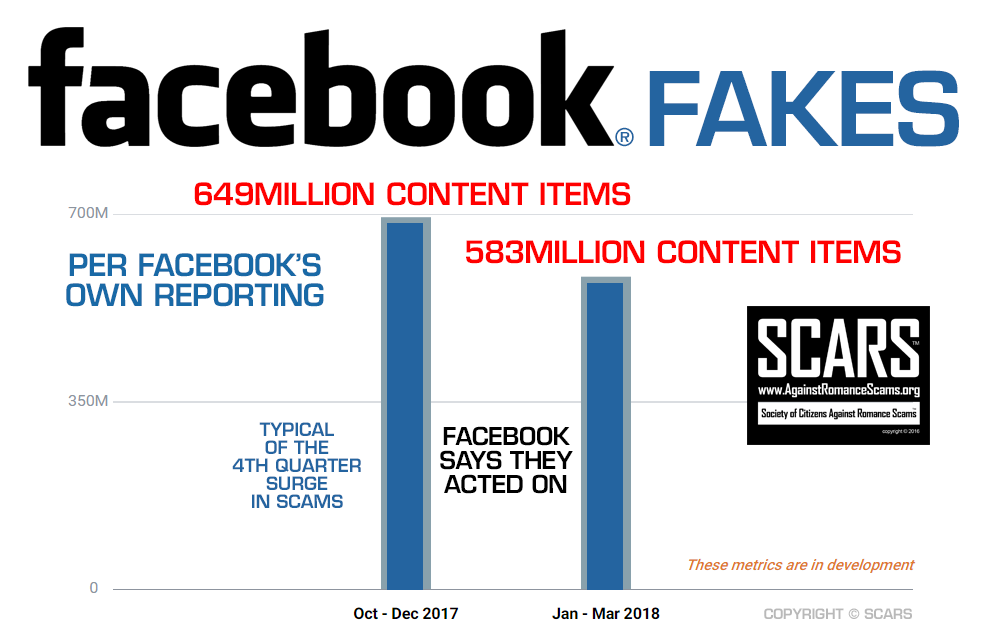

In Q1 2018, they say they disabled 583 million fake accounts, down from 694 million in Q4 2017. We suspect that the number of fake accounts did not go down, but rather that the chaos among their moderators is reducing their effectiveness.

Notice that Facebook says fake accounts make up between 3% and 4% of monthly active users, yet by the numbers below the figure peaks at almost 35%. What are we to believe?
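Assuming the “almost 35%” comes from dividing a quarter's disabled accounts by roughly 2 billion monthly active users (our assumption about how the comparison is made, not a figure Facebook publishes), it can be reproduced in Python as follows. Keep in mind that the 3% to 4% figure is a share of monthly active accounts, while the 583 and 694 million figures are counts of accounts disabled over a whole quarter, so the two percentages use different bases.

```python
# Fake accounts disabled per quarter, as reported by Facebook.
DISABLED = {"Q4 2017": 694_000_000, "Q1 2018": 583_000_000}

# Assumption: roughly 2 billion monthly active users as the denominator.
MAU = 2_000_000_000


def pct_change(old: int, new: int) -> float:
    """Relative change from old to new (negative means a decrease)."""
    return (new - old) / old


def share_of(count: int, base: int = MAU) -> float:
    """Count expressed as a fraction of the assumed MAU base."""
    return count / base


change = pct_change(DISABLED["Q4 2017"], DISABLED["Q1 2018"])
print(f"Q4 2017 -> Q1 2018: {change:.1%}")  # prints -16.0%
for quarter, count in DISABLED.items():
    print(f"{quarter}: {share_of(count):.1%} of an assumed 2 billion MAU")
```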

Facebook says that its metrics for fake accounts acted on can vary widely (what does that mean?), driven by new cyberattacks and by the variability of its detection technology’s ability to find and flag them, and that the decrease in fake accounts disabled between Q4 and Q1 is largely due to this variation. What this really means is that they are guessing at how effective they are and how many fake accounts there are.

How Many Fake Accounts Does Facebook Claim They Find Before Users Report Them?

What They Say They Measure

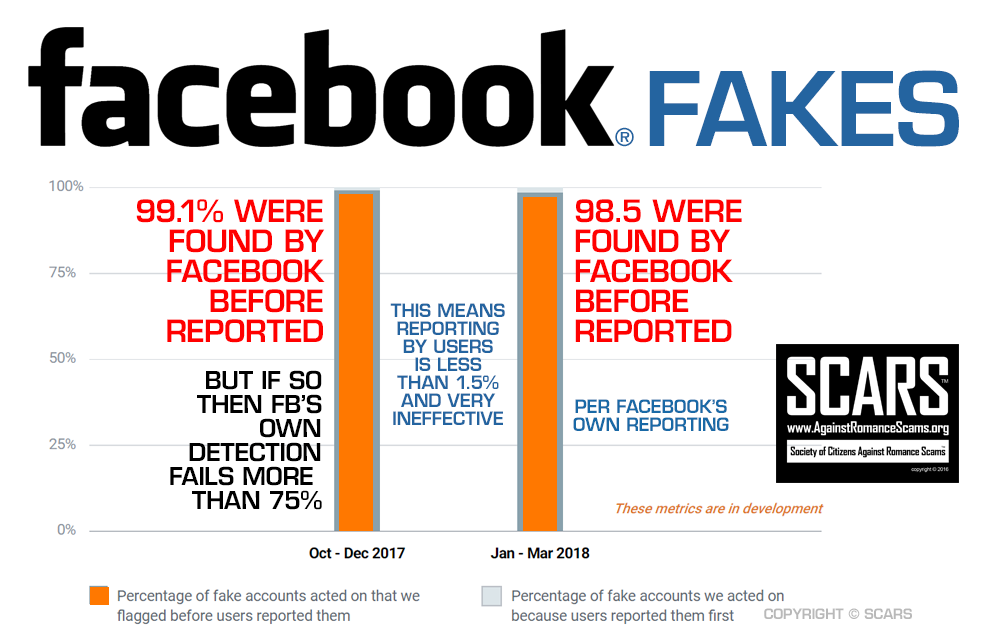

This metric shows the percentage of accounts Facebook took action on that it found and flagged before users reported them. Facebook claims it was 99.1% for Q4 2017. The percentage is calculated as the number of fake accounts acted on that Facebook found and flagged before users reported them, divided by the total number of fake accounts it acted on. This number reflects fake accounts that were created and then disabled or otherwise acted on; it does not include blocked attempts to create fake accounts.
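Facebook's formula can be written out directly. This Python sketch uses a hypothetical helper name, `proactive_rate`, and made-up counts chosen only to show the arithmetic.

```python
def proactive_rate(flagged_before_report: int, total_acted_on: int) -> float:
    """Share of actioned fake accounts found and flagged before any user report.

    Follows the definition Facebook describes: accounts found and flagged
    before users reported them, divided by all fake accounts acted on.
    Blocked creation attempts are not part of either count.
    """
    if total_acted_on <= 0:
        raise ValueError("total_acted_on must be positive")
    if not 0 <= flagged_before_report <= total_acted_on:
        raise ValueError("flagged_before_report must be between 0 and total_acted_on")
    return flagged_before_report / total_acted_on


# Hypothetical counts, chosen only to illustrate the arithmetic.
print(f"{proactive_rate(991, 1_000):.1%}")  # prints 99.1%
```

Nothing in this ratio accounts for false positives or for user reports that were never acted on, because neither quantity appears in the numerator or the denominator.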

Notice that there is no reporting of “false positives”: in other words, real people that Facebook mistook for fakes and acted upon.

Nor does it reflect the overall number of reported fakes that were disregarded, which we estimate to be over 80%.

What Do The Numbers Mean According To Facebook?

Facebook says that “these numbers change based on how our detection technology help us find potential fake accounts, review them and remove them.” But the sad truth is that they do not know what they don’t know: there could be (and probably are) many times more fakes that their detection does not see.

In Q1 2018, they say they found and flagged 98.5% of the accounts they subsequently took action on before users reported them, and acted on the other 1.5% because users reported them first. This figure decreased from 99.1% in Q4 2017. Maybe it decreased because they are listening less to user reports, in other words automatically disregarding most of them, since their moderators have only 10 seconds to decide whether a profile is fake!
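The percentages can be turned back into approximate counts. Assuming the 98.5% rate applies to the 583 million accounts disabled in Q1 2018 and the 99.1% rate to the 694 million disabled in Q4 2017, a quick Python check gives the implied number of accounts acted on only after a user report; `user_reported_count` is a hypothetical helper.

```python
def user_reported_count(total_acted_on: int, proactive_rate: float) -> int:
    """Accounts acted on only after a user report, implied by the proactive rate."""
    return round(total_acted_on * (1 - proactive_rate))


# Figures reported by Facebook for each quarter.
q1_2018 = user_reported_count(583_000_000, 0.985)
q4_2017 = user_reported_count(694_000_000, 0.991)
print(f"Q1 2018: ~{q1_2018 / 1e6:.1f} million acted on after user reports")
print(f"Q4 2017: ~{q4_2017 / 1e6:.1f} million acted on after user reports")
```

By this arithmetic, roughly 8.7 million accounts in Q1 2018 and 6.2 million in Q4 2017 were acted on only after users reported them.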

Once again, Facebook claims that their metrics for fake accounts acted on can vary widely, as explained above, and that the decrease in the percentage of fake accounts found and flagged between Q4 and Q1 is largely due to this variation. Again, they have no clue!

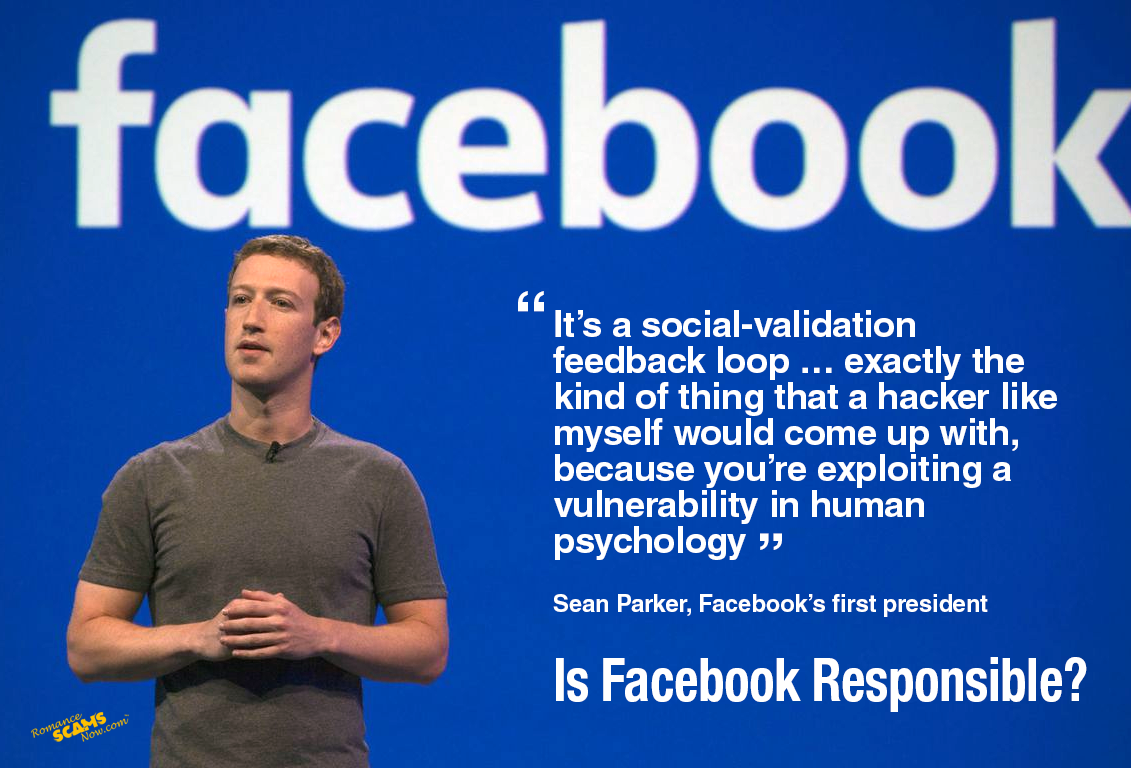

What Does This Tell Us?

It Tells Us That:

- Facebook’s technology is a closed loop that cannot see beyond itself, meaning they do not know what they cannot see.

- Facebook’s reporting is wildly inconsistent and fails to take advantage of its real resource, its users, to help it make determinations about fakes.

- Facebook’s variability is so broad as to make the numbers almost meaningless; they just paint a pretty picture for Congress!

For Facebook To Be Truly Transparent It Needs To Include Additional Metrics:

- Reported Fakes disregarded by moderators

- Average time spent by moderators on fake profile examination

- Total number of fakes reported to Facebook

- The average time between the time of the report and when a moderator reviewed the profile

- What actions were taken by Facebook on these fake profiles
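To make the proposal concrete, here is a hypothetical Python record for the metrics listed above. The class and field names are our own invention, not anything Facebook uses, and the numbers in the example are illustrative, not real data.

```python
from dataclasses import dataclass, field


@dataclass
class FakeAccountTransparencyReport:
    """Hypothetical record of the additional metrics proposed above."""

    total_fakes_reported: int          # total number of fakes reported to Facebook
    reports_disregarded: int           # reported fakes disregarded by moderators
    avg_review_seconds: float          # average moderator time per fake profile
    avg_report_to_review_hours: float  # average delay from report to moderator review
    actions_taken: dict[str, int] = field(default_factory=dict)  # action -> count

    def disregard_rate(self) -> float:
        """Share of user reports that moderators disregarded."""
        if self.total_fakes_reported == 0:
            return 0.0
        return self.reports_disregarded / self.total_fakes_reported


# Illustrative numbers only; they are not real data.
report = FakeAccountTransparencyReport(
    total_fakes_reported=1_000,
    reports_disregarded=800,
    avg_review_seconds=10.0,
    avg_report_to_review_hours=48.0,
    actions_taken={"disabled": 200, "no_action": 800},
)
print(f"{report.disregard_rate():.0%} of reports disregarded")
```

Publishing even these few fields each quarter would let outsiders check the gap between what users report and what Facebook acts on.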

In short, this is a systemic failure on Facebook’s part. No international quality standard would accept such a process.

A recent Facebook experience: a while ago I received a friend recommendation from Facebook. The name of this “friend” was that of a person who had scammed me. When I looked at the account, I searched its picture on TinEye, and it came back as a profile on a French dating site (obviously stolen). I reported the account to Facebook, but they replied that no action would be taken and that I should just block it.

This is a major failing of Facebook: it sometimes takes 25 reports before they act.